Overview

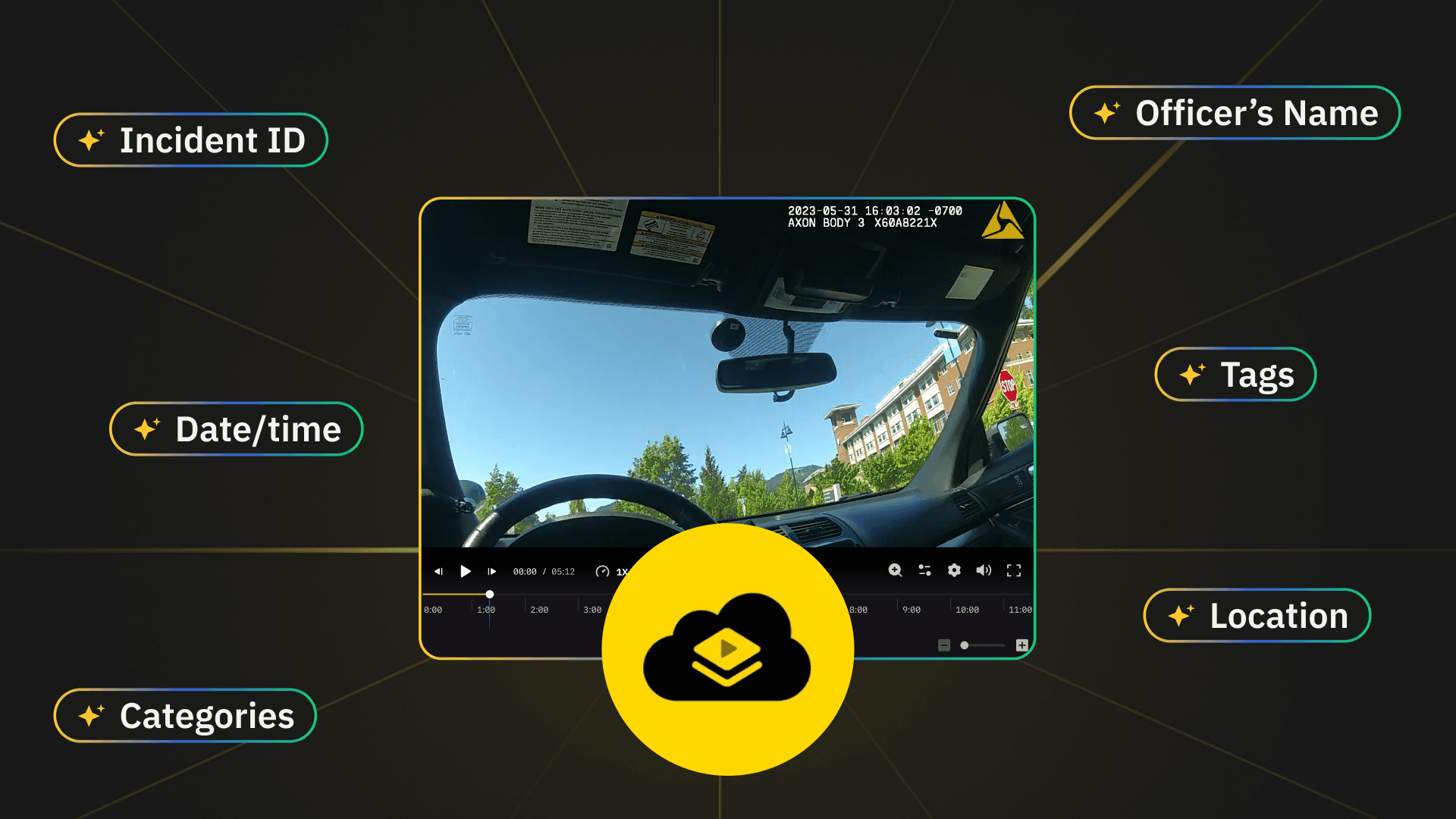

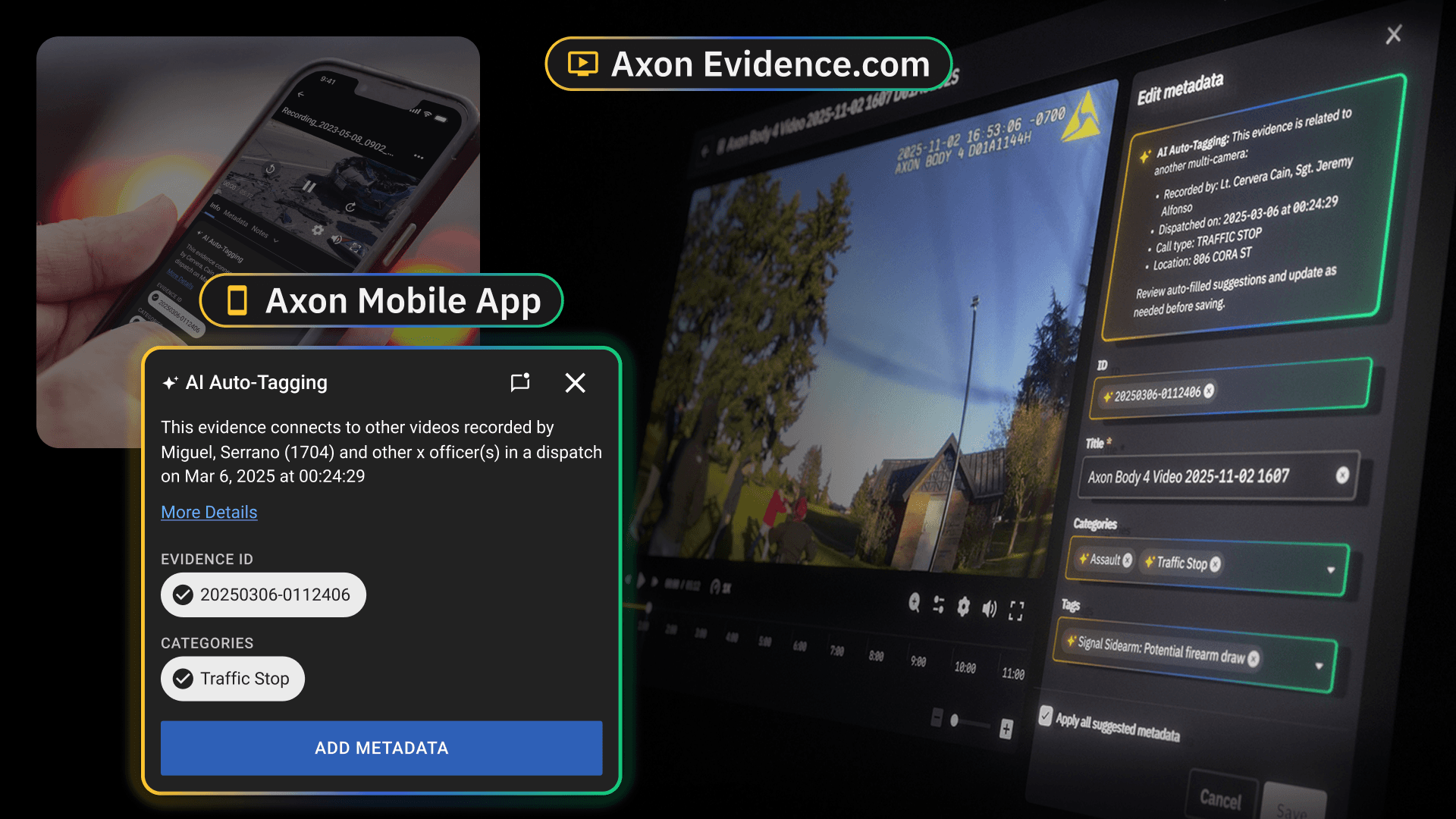

AI Auto-Tagging analyzes multi-source signals (camera overlap, transcripts, dispatch data) to automatically suggest precise metadata across Axon App and Evidence. This drastically reduces manual data entry, enabling officers to quickly validate information and ensuring the completeness and reliability of every case file.

The design prioritizes high-utility function over visual flair to support a user base of over 1 million officers.

My Role

- Led end-to-end UX for AI Auto-Tagging across mobile and desktop, driving the process from discovery to delivery to accelerate evidence retrieval.

- Designed "human-in-the-loop" workflows for reviewing and editing AI-generated metadata, effectively balancing automation speed with user control and trust.

- Collaborated cross-functionally with AI/ML and Engineering teams to align design decisions with model behaviors, technical constraints, and rollout plans.

Reducing manual data entry with AI-driven metadata suggestions.

Measurable Outcome

Design Process

Research & Discovery

Understanding the problem space.

FOCUS POINTS:

Goal

Conducted stakeholder interviews to investigate why secondary officers' evidence remains untagged.

Analysis

Identified potential CAD metadata inputs and analyzed officer data entry habits.

Design

Drafted mobile user flows and early mockups to visualize metadata suggestions.

Research Plan

Planned validation for AI-tagging concepts and call-stacking behaviors.

Design Iteration & Usability Evaluation

Prototyping and validating solutions.

FOCUS POINTS:

Goal

Define an intuitive and trustworthy way for officers to review AI-generated suggestions.

Analysis

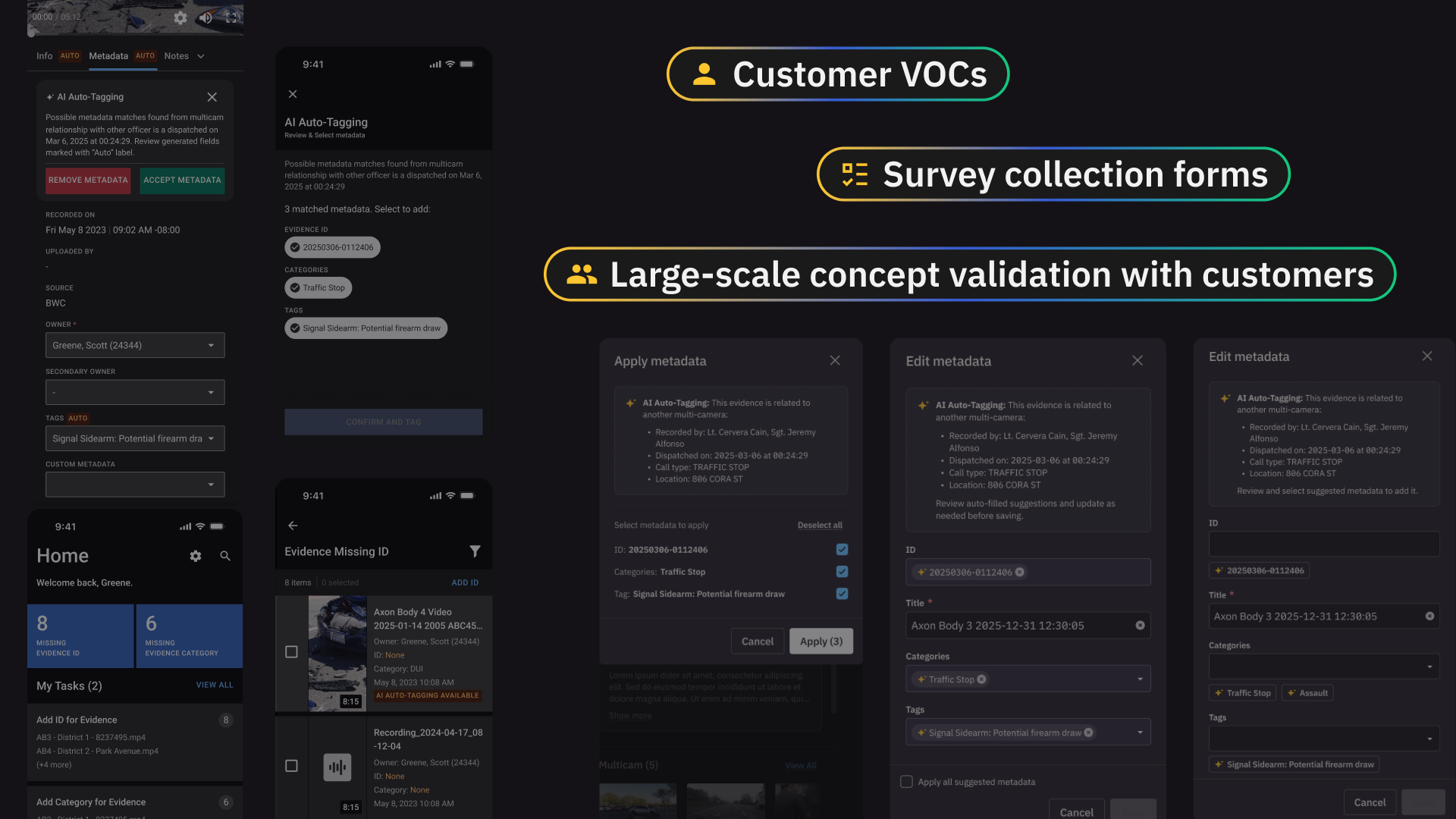

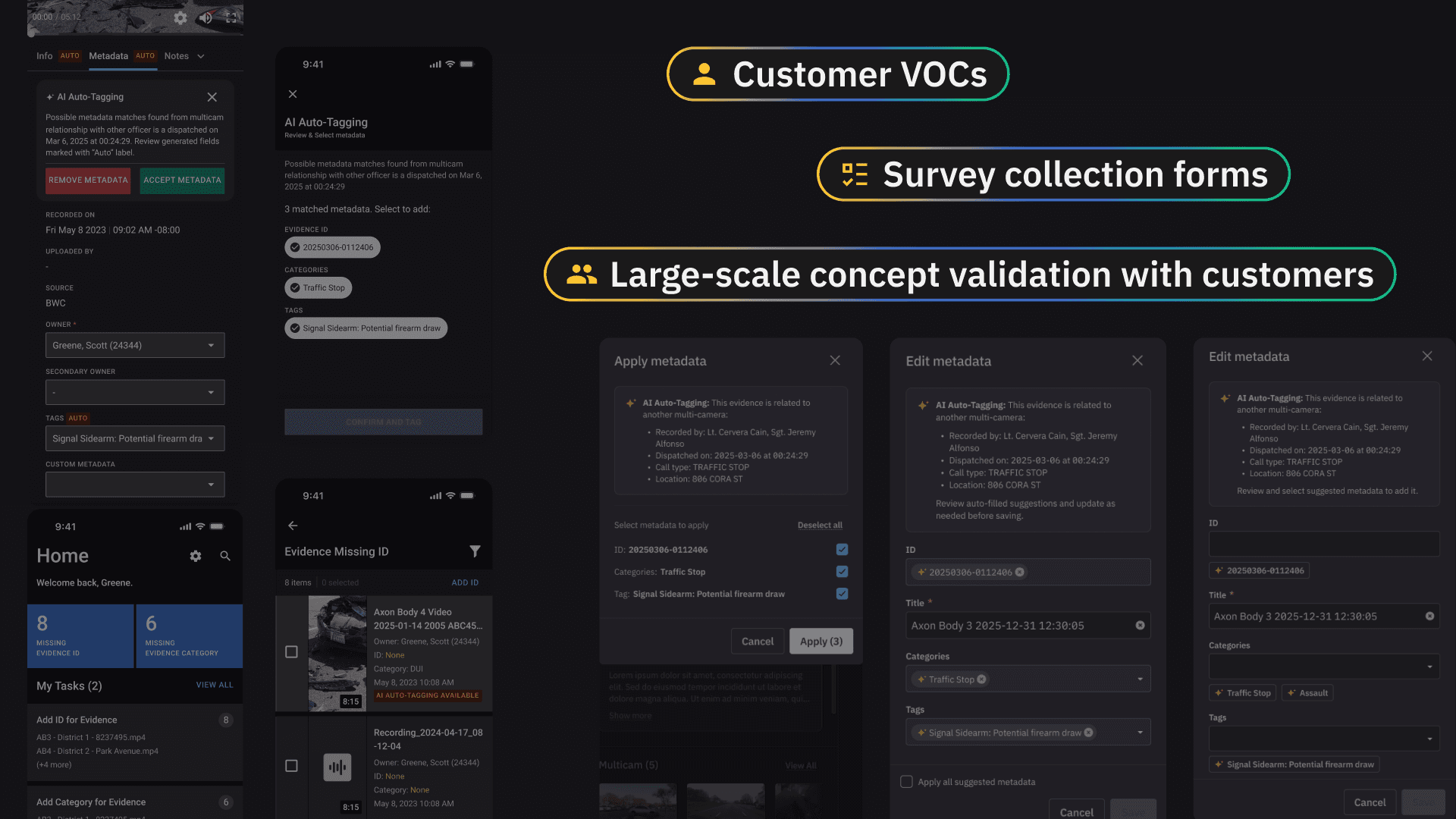

Conducted Voice of Customer (VOC) sessions with 24 agencies to gather direct feedback.

Design

Tested 3 distinct UI patterns: Pre-filled, Inline, and Selectable metadata.

Result

Validation: Confirmed 'Selectable Suggested Metadata' as the most intuitive interaction model via VOC testing.

Refinement: Optimized visibility, context cues, and confirmation flows to directly address agency feedback.

Final Prototype: Delivered a validated design featuring clear metadata context (Time, Location, Officer) and efficient single-tap acceptance.

Handoff & Implementation

Bringing the design to life.

FOCUS POINTS:

Goal

Ensure design fidelity in production.

Design

Delivered precise specs, redlines, and QA support to ensure the final implementation matched the design vision.

Result

Post-Launch: Monitored deployment metrics to verify stability and user adoption in production.
